ChatGPT and Memory: What's Changing

Chapter 1 - Speculations

Have you ever stopped to think about what would happen if ChatGPT remembered everything you said? Well, OpenAI's Memory feature is already active (with the exception of Europe, the UK, and a few other countries), and it raises a lot of interesting questions about the future of our interactions with AI. 🤔

I've started working on it, but I don't have all the elements to discuss it in depth yet. So this first chapter of 'speculations' collects some initial thoughts on a topic that will surely stay in the spotlight for the next few months (and beyond).

In fact, up until now, my favorite slide at the workshops was this one:

It remains true for LLMs: Once trained, they cannot receive any new information.

But LLMs are now surrounded by a world of software and technology that helps them keep a memory of a thousand things. And as I wrote as recently as January, all of it works by feeding content into the model's context window while we serenely chat.

What is the extended Memory feature?

Memory is a new ChatGPT feature launched by OpenAI that allows the AI to remember information from past conversations and use it in future interactions. Unlike simple chat history, which keeps individual conversations separate, Memory builds a persistent model of the user across all interactions.

ChatGPT can remember personal details, preferences, ongoing projects, and other information shared over time, without the user repeating it in each new conversation. The system does not literally memorize every word exchanged. Instead, it seems to create summaries and syntheses of the most relevant information (there is no documentation about this yet), gradually building an understanding of the user.
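
To make the idea concrete, here is a minimal Python sketch of how a memory layer of this kind might work. It is purely my assumption and not OpenAI's implementation, which is undocumented: the names `MemoryStore` and `build_context` are hypothetical, and the "summaries" are just short notes written by hand. The point it illustrates is the one above: the model itself learns nothing new, and the software around it simply places remembered notes in the context window before each new message.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Hypothetical per-user store of short notes distilled from past chats."""
    notes: list[str] = field(default_factory=list)

    def remember(self, note: str) -> None:
        # Keep each note once, in the order it was first learned.
        if note not in self.notes:
            self.notes.append(note)

    def forget(self, keyword: str) -> None:
        # Drop every note that mentions the keyword (case-insensitive).
        self.notes = [n for n in self.notes if keyword.lower() not in n.lower()]


def build_context(memory: MemoryStore, user_message: str) -> str:
    """Assemble the text placed in the context window for one new message."""
    lines = ["What you know about the user:"]
    lines += [f"- {note}" for note in memory.notes]
    lines += ["", f"User: {user_message}"]
    return "\n".join(lines)


memory = MemoryStore()
memory.remember("Is writing a book")
memory.remember("Runs workshops on generative AI")
memory.forget("book")  # an explicit request to forget removes the note
print(build_context(memory, "Any ideas for my next session?"))
```

In this toy version, forgetting really does delete the note, so I can always inspect exactly what will be sent to the model. That is the kind of visibility and control a "muddy blob" would not give me.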

Unlike the existing Memory, which stores a few sentences and can be managed by adding and removing memories, this one seems more like a muddy blob in which it remembers whatever it wants. For example, it remembers that I asked it to forget a useless conversation from two years ago about an idea for a character in a book I was writing. And it promptly comes back to tell me, "Like the character, GIGI, that you asked me to forget."

In the official demo, meanwhile, everything runs smoothly.

As of today, the only technical documentation, and a minimal one at that, is this: help.openai.com/en/articles/8590148-memory-faq

Thankfully, this feature is optional and can be turned off anytime, allowing users to control what information they want the AI to remember or forget. As a good friend of mine says, “At least that!”

The substance

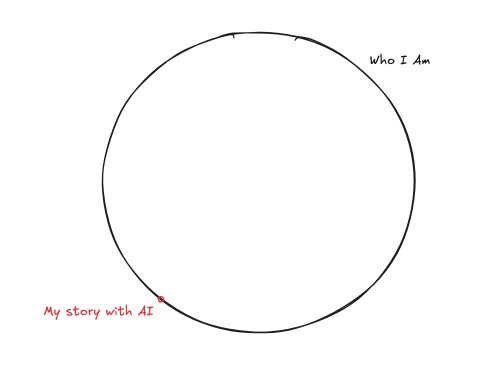

I have had over 5,000 conversations with ChatGPT (at least the ones I have saved). These conversations are not "me" in their entirety but fragments of my digital history—little puzzle pieces that show some aspect of me based on what I have written.

But what I have told the AI is only a small part of who I am and of what I have said in my life.

It's not me.

The digital fragments of ourselves

Imagine having a friend who only knows you through your chats. This friend has never seen you in person and doesn't know the tone of your voice or how you gesture when you're excited. All he knows about you is what you've written to him.

When we write to ChatGPT, or to whichever AI we like to use, we share only a select fraction of our experiences. We talk about projects, ask questions, and ask for advice, but this is just a tiny part of who we are. We don't share the emotions we feel watching a sunset, the spontaneous conversations with friends, or the fleeting thoughts that cross our minds during a walk. We don't tell it who we respect, who we can't stand, who we care about, and what leaves us indifferent.

Even if OpenAI recorded our lives like in "The Truman Show," it could only observe what we do and infer some thoughts from what we ask. It's like watching a fish in a tank: we can see what it does but don't know what it thinks.

However, the problem is that we will assume that ChatGPT knows us better than anyone else.

The Dark Sides of Memory: A Muddy Blob of Personalization

As fascinating as it is, this feature risks generating an unmanageable blob of data, a muddy pile of information from which it will be almost impossible to understand what ChatGPT actually "sees" about us.

Of course, this process of partial knowledge and interpretation is no different from what happens with anyone who knows us. Still, there is a fundamental difference: ChatGPT is not a person. It has no human intuition, no empathy, and none of the implicit sense of social context that drives human interactions. This blob of artificial memory could create unexpected problems: persistent misunderstandings, faulty inferences that crystallize over time, or awkward moments when the AI unearths information in inappropriate contexts.

It may also influence responses in subtle and difficult-to-detect ways, steering us in directions we might not have consciously chosen.

The implications and interpretations

AI could make inferences based on what we said, creating connections we didn’t intend to make. It could generalize from specific or contextual information, building a model of us that doesn’t match reality.

If we mention feeling tired during an evening work session, the AI might label us as "people who work too much." If we ask about a topic out of curiosity or for a specific project, it might assume that the topic is a passion or an area of expertise. In my case, it says that I ask about very different topics and that I return to the same questions over and over again. And I believe it! In workshops, I often repeat the same exercises, and these often have little or nothing to do with my work. ChatGPT probably thinks I have multiple personality disorder!

Privacy and sensitive data

What happens when we share sensitive information with ChatGPT? What if this information concerns other people? Data management could become an increasingly sensitive issue. 🔒

We might casually mention details about colleagues, friends, or family members that, if remembered by the AI and presented in future contexts, could create embarrassing situations or nontrivial problems, especially if it were to hallucinate.

It's true that OpenAI already has all this data on its servers (certainly well protected), but it has never been used to make inferences about the characteristics of the people it belongs to. In the muddy blob, however, it will be very difficult to label this data and to understand how the models use it.

Over-adaptation

ChatGPT could become so adapted to our style that it would stop challenging us intellectually. It could develop a sort of "filter bubble" around us, limiting the diversity of perspectives offered.

If the AI noticed that we tend to agree with a specific type of argument, it might start to favor that type of reasoning, depriving us of alternative viewpoints that could enrich our thinking.

If it realized that some answers bother us, it might stop giving them, but just because they bother us doesn't mean they aren't helpful.

There are other side effects, but I wanted to write while the topic is still hot, so I'll stop here with the negative aspects. Let's move on.

ChatGPT's memory (and soon that of other players) could also bring several interesting benefits. Since I started playing with generative AI, I've set up at least ten different projects to control 'what it knows about me'. I got one of them working a few days ago, and I love it: I connected my entire Obsidian archive, which contains EVERYTHING I'VE WRITTEN AND PUBLISHED (not emails), and ALL MY WHATSAPP to Claude via MCP. I'm going wild there, but I have a lot of control over the data and over how and when it's used.

The potential benefits

Efficiency in conversations

No more repetitive explanations! We would no longer have to say, "As I mentioned to you last week..." because ChatGPT would remember the context. This could save time and energy.

Imagine working on a complex project that unfolds over months. Each new session could pick up exactly where we left off, without having to recontextualize or summarize what has already been discussed, and without having to search for the chat where we discussed it (an incredibly annoying, challenging, and ineffective task).

Continuity in dialogues

We could pick up a conversation days or weeks later, and the AI would know exactly what we discussed. This would allow for more natural and fluid conversations, similar to those we have with people who know our background.

"Salt..." (IYKYK)

Growing customization

With each interaction, ChatGPT could refine its understanding of our tastes, preferences, and needs. The AI could implicitly adapt to our communication style, interests, and priorities. ☕

This personalization could manifest itself in subtle ways: the tone of responses, the level of detail provided, and the types of examples used. The AI could develop a “theory of mind” about us, anticipating what we would find useful or interesting.

With a side effect: it could use all this, for example, to filter what you search for on the web, and so become your new digital profile.

How could we manage this new digital relationship?

With memory active, we should think differently about our interactions:

Select what we would like to be remembered

Some conversations may shape its memory of you in ways you don't want. In my case, with over 5,000 conversations, it would be difficult to know which ones to keep and which to delete.

We may need to develop a metacognitive awareness of our interactions: Which conversations do we want to contribute to the AI’s “memory” of us, and which should be considered exploratory, temporary, or out of context?

Starting from scratch

A drastic solution? Erase everything and start over. Sometimes, a fresh start might be the easiest way to stay in control. 🔄 (In fact, I'll probably have to erase everything and start over, maybe do some Vibe Coding and keep the archive available on Obsidian, along with everything I've written.)

This option raises interesting questions about the nature of our digital relationships. How often should we “reset” these relationships? Is it comparable to how, in real life, relationships evolve naturally through periods of more or less contact?

What it might forget, what it might remember

Artificial memory will not work like human memory. From what I have seen so far, it does not remember everything perfectly. Rather, it creates summaries and syntheses based on recurring patterns, filtered through 'sieves' that only OpenAI's algorithms know.

It may be more likely to remember general themes, recurring interests, and stylistic preferences than specific details from individual conversations.

But as mentioned above, this "impressionistic" memory could be both an advantage and a limitation, one that contributes to the Muddy Blob.

So what?

ChatGPT’s memory is an interesting step toward more personal and contextualized AI assistants. We are facing a new digital relationship that is neither completely instrumental nor completely personal. And it brings us closer and closer to the cognitive delegation I often talk about, where we outsource not only the ability to reason but also the ability to remember.

What will happen when ChatGPT tells us, "Look, you prefer chocolate cake, not apple cake. It's evident from what we have said to each other over the years"?

This evolution of AI tools raises fundamental questions about how we manage our digital identities, how much we are willing to reveal about ourselves to automated systems, and how these systems might shape our future experiences.

The answers are not yet clear. These are long-term questions, and the answers will emerge through a process of experimentation, reflection, and adaptation by all of us who use these technologies.

As we venture into this new territory, it becomes important to maintain an attitude of conscious curiosity: to explore the possibilities offered by artificial memory without forgetting that control over what we share and how we are represented should remain in our hands.

But for now, as a curious experimenter, I have decided to leave this feature active and test it out in the field. In a few weeks, I will return with more details, concrete observations, and, perhaps, a few surprises on how this artificial memory is shaping my digital and human experience.

See you soon!

Max